“Programming a computer to be clever is harder than programming it to learn to be clever,” Hugo Larochelle, a researcher at Google Brain and adjunct professor at Université de Sherbrooke, said during his “Beyond Artificial Intelligence: Deep Learning” presentation at SUS Academia Week on Jan. 31.

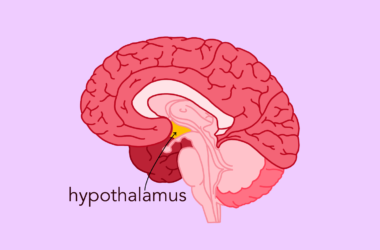

Deep learning is a subset of Artificial Intelligence (AI) that attempts to mimic the human brain’s ability to comprehend abstract notions. For example, when a human sees a dog, even if it is an unfamiliar breed, they are often still able to recognize the creature as a dog. This is likely because they have seen many dogs before, and their brain has developed a complex algorithm that runs through a series of checklist questions: Does it walk on four legs? Does it have a snout? Does it have a tail it can wag? If it only has one eye, is it a dog with a missing eye or a different creature altogether?

As part of his presentation, Larochelle explained the science behind deep learning, in which artificial networks of ‘neurons’ are grouped into layers, each dedicated to processing a particular piece of information. In the case of recognizing a dog, each of the initial questions could be a layer, with one layer considering whether the creature walks on four legs and another considering whether it has a snout. Larochelle’s team aims to give a deep learning machine a rich diversity of similar problems in the hope of training the system to learn new recognition tasks faster.
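The layered structure can be sketched in a few lines of Python. This is only an illustration loosely based on the dog example, not anything shown in the presentation: the three input features, the function names, and every weight are hypothetical and hand-picked, whereas a real network would learn its weights from training data.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs, adds a bias, and squashes it."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical, hand-picked weights (assumption: not learned from data).
HIDDEN_WEIGHTS = [[2.0, 2.0, 0.0], [0.0, 1.0, 3.0]]
HIDDEN_BIASES = [-3.0, -2.0]
OUTPUT_WEIGHTS = [[3.0, 3.0]]
OUTPUT_BIASES = [-2.0]


def dog_score(features):
    """Pass features through a hidden layer, then an output layer with a single neuron."""
    hidden = layer(features, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


# Hypothetical features: [walks on four legs, has a snout, has a wagging tail]
likely_dog = dog_score([1.0, 1.0, 1.0])
unlikely_dog = dog_score([0.0, 0.0, 0.0])
print(f"all features present: {likely_dog:.2f}")
print(f"no features present:  {unlikely_dog:.2f}")
```

Each call to `layer` feeds its output into the next one, which is what makes the network “deep”; training would consist of adjusting the weights until the scores match labelled examples.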

While researchers have studied neural networks and deep learning for over 50 years, the recent buzz around the topics is attributable to improvements in technology infrastructure and the ability to give machines massive amounts of high-quality training data. Canada, in particular, has led the way in recent AI advancements. Industry pioneers, such as McGill alumnus Yoshua Bengio, under whom Larochelle was a student, are responsible for many novel AI innovations. Bengio developed a revolutionary language translation system that now forms the basis of Google Translate.

Despite these breakthroughs, Larochelle believes that we still have a long way to go to improve the efficiency of AI and deep learning.

“A computer is essentially like a really, really dedicated student that will do lots of exercises but is sort of dumb and not learning particularly fast,” Larochelle said.

Deep learning technology has the potential to be applicable in a variety of industries. Larochelle described a particular case in the agricultural industry wherein an engineer trained a neural network system to recognize the shape and size of cucumbers, so that they could be run through an automated process instead of sorted manually. Applications for deep learning can even be found in art, including in musical composition and in tools such as the website deepart.io, where users can upload photographs to transform them into paintings of various styles.

The powerful potential of deep learning also comes with great responsibility.

“We have to be mindful about biases the systems might have that often will come from badly collected data,” Larochelle said.

To illustrate his point, Larochelle described cases where facial recognition software was better at detecting white males than women or people of colour, due to the unrepresentative data sets on which the systems were trained.
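One simple way to surface this kind of gap is to measure accuracy separately for each group rather than as a single overall figure. The sketch below assumes a hypothetical list of `(group, predicted, actual)` records; the group names and values are made up for illustration and do not come from any real system or from the presentation.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the fraction of correct predictions for each group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / totals[group] for group in totals}


# Made-up toy records (assumption): (group, predicted label, actual label)
records = [
    ("group_a", 1, 1),
    ("group_a", 1, 1),
    ("group_a", 0, 0),
    ("group_a", 1, 1),
    ("group_b", 0, 1),
    ("group_b", 1, 1),
]

rates = accuracy_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} correct")
```

In this toy data, `group_b` has fewer examples and a lower accuracy, a pattern that an overall accuracy figure would hide.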

The opportunity to learn about the rapidly growing and increasingly influential field of deep learning was exciting for students in attendance.

“We’re really lucky to get to see such people speak at McGill and to get an idea of what we can do with our degrees,” Lily Carson, Arts student, said.